Introduction to Web Scraping with Python

As the internet continues to grow rapidly, so does the amount of data available on it. This data can be useful for various purposes, such as market research, competitor analysis, or even for personal interests. However, manually collecting data from websites can be tedious and time-consuming. This is where web scraping comes in handy.

Web scraping is the process of automating the extraction of data from websites. Python is one of the most popular programming languages for web scraping due to its simplicity, flexibility, and the availability of numerous libraries specifically designed for web scraping.

In this article, we will explore the basics of web scraping with Python. We will look into the HTML structure of websites and how to use Python libraries to extract data from them. By the end of this article, you will have a solid understanding of how web scraping works and how to use Python for web scraping.

Before we proceed, it’s important to note that web scraping can be a controversial topic, and it’s essential to follow ethical and legal guidelines while scraping data from websites. Always review the website’s terms and conditions and obtain permission before scraping any data. Additionally, avoid sending requests so quickly that you slow the site down for other users, which can also lead to legal issues.

With that in mind, let’s dive into the world of web scraping with Python.

Understanding HTML Structures for Scraping

Before we start scraping data from websites, it’s essential to understand the HTML structure of websites. HTML (Hypertext Markup Language) is the standard markup language used to create web pages. It consists of tags that define the different elements and content on a web page.

To extract data from a website, we need to understand the HTML structure of the web page and identify the specific tags that contain the data we want to extract. Here’s an example of the HTML structure of a web page:

    <html>
      <head>
        <title>Web Scraping Example</title>
      </head>
      <body>
        <h1>Welcome to my website</h1>
        <p>This is a paragraph of text.</p>
        <ul>
          <li>List item 1</li>
          <li>List item 2</li>
          <li>List item 3</li>
        </ul>
      </body>
    </html>

In the above example, the whole document is wrapped in an <html> element, which contains a <head> and a <body>. The <head> holds information about the web page, such as the title, while the <body> holds the visible content of the web page.

Within the <body> tag, we have various elements, such as the <h1> tag for headings, <p> tags for paragraphs, and <ul> and <li> tags for lists.
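To make this nesting concrete, the sketch below walks the sample document and prints each tag indented by its depth. It uses Python’s built-in html.parser module rather than the libraries introduced later, and the names SAMPLE_HTML, OutlineParser, and build_outline are made up for this illustration.

```python
from html.parser import HTMLParser

# The sample page from above, stored as a string so no network access is needed.
SAMPLE_HTML = """
<html>
<head><title>Web Scraping Example</title></head>
<body>
<h1>Welcome to my website</h1>
<p>This is a paragraph of text.</p>
<ul>
<li>List item 1</li>
<li>List item 2</li>
<li>List item 3</li>
</ul>
</body>
</html>
"""


class OutlineParser(HTMLParser):
    """Records each opening tag together with its nesting depth."""

    def __init__(self):
        super().__init__()
        self.depth = 0
        self.outline = []  # list of (depth, tag) pairs

    def handle_starttag(self, tag, attrs):
        self.outline.append((self.depth, tag))
        self.depth += 1

    def handle_endtag(self, tag):
        self.depth -= 1


def build_outline(html):
    """Parse an HTML string and return its (depth, tag) outline."""
    parser = OutlineParser()
    parser.feed(html)
    parser.close()
    return parser.outline


outline = build_outline(SAMPLE_HTML)
for depth, tag in outline:
    print("  " * depth + tag)
```

The depth counter relies on every tag being explicitly closed, as in the sample. Void elements such as <br> have no closing tag and would need special handling.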

Once we know which tags hold the data we want, extracting it is a matter of selecting those tags. For example, if we want to extract the list items from the above example, we can use the following Python code:

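    import requests
    from bs4 import BeautifulSoup

    # Placeholder URL; replace it with the page that serves the HTML above
    url = 'https://example.com'
    response = requests.get(url)

    # Parse the HTML and collect every <li> element
    soup = BeautifulSoup(response.text, 'html.parser')
    list_items = soup.find_all('li')

    # Print the text of each list item
    for item in list_items:
        print(item.text)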

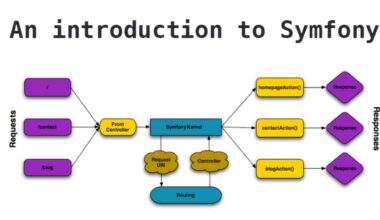

Using Python Libraries for Web Scraping

Python has a vast array of libraries that can be used for web scraping. Here are some of the most popular libraries used for web scraping with Python:

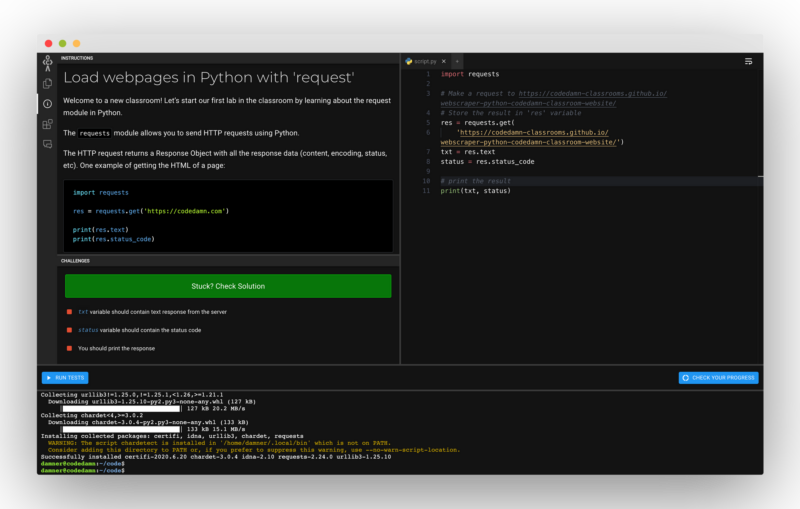

1. Requests

The Requests library is used to send HTTP requests to websites. It is a simple and elegant library that can be used to retrieve website data. With the help of this library, you can easily send GET and POST requests. Here’s an example:

    import requests

    url = 'https://example.com'
    response = requests.get(url)
    print(response.text)

The above code will retrieve the HTML content of the website and print it on the console.

2. Beautiful Soup

Beautiful Soup is a Python library used for web scraping purposes to pull the data out of HTML and XML files. It creates a parse tree from page source code that can be used to extract data in a hierarchical and more readable manner. Here’s an example:

    import requests
    from bs4 import BeautifulSoup

    url = 'https://example.com'
    response = requests.get(url)
    soup = BeautifulSoup(response.text, 'html.parser')
    title = soup.title.string
    print(title)

The above code will extract the title of the website and print it on the console.

3. Selenium

Selenium is a web driver tool that allows you to automate web browsers. It can be used for web scraping purposes as well. Selenium provides a way to automate interaction with web pages and can be used to scrape data from websites that require user interaction, such as clicking buttons or filling out forms. Here’s an example:

    from selenium import webdriver

    url = 'https://example.com'
    driver = webdriver.Chrome()
    driver.get(url)
    title = driver.title
    print(title)
    driver.quit()

The above code will open the website in the Google Chrome browser, print the title of the page, and then close the browser. It assumes that Chrome is installed on your machine.

4. Pandas

Pandas is a popular Python library used for data manipulation and analysis. It can also be used for web scraping purposes. With the help of Pandas, you can easily read HTML tables from web pages and convert them into data frames. Here’s an example:

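    import pandas as pd

    url = 'https://example.com/table.html'

    # read_html returns a list with one DataFrame per <table> it finds
    dfs = pd.read_html(url)

    for df in dfs:
        print(df)

The above code will retrieve all the HTML tables from the page and print each one as a data frame.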

Extracting Data from Websites with Python

Now that we have understood the HTML structure of a webpage and have seen some of the popular Python libraries used for web scraping, let’s dive into how to extract data from websites with Python.

To extract data from a website, we first need to send a request to the website using the Requests library. Once we have received the response, we can use the Beautiful Soup library to parse the HTML content and extract the data we need.

Let’s say we want to extract the current temperature of a city from a weather website. First, we need to inspect the webpage and identify the HTML tag that contains the temperature data. Suppose the temperature is displayed in a div tag with class “temp”. We can use the following Python code to extract the temperature data:

    import requests
    from bs4 import BeautifulSoup

    url = 'https://example.com/weather'
    response = requests.get(url)
    soup = BeautifulSoup(response.text, 'html.parser')

    # find() returns None when no <div class="temp"> exists, so check first
    temp_div = soup.find('div', {'class': 'temp'})
    if temp_div is not None:
        temperature = temp_div.text.strip()
        print('Current temperature:', temperature)

The above code will send a request to the weather website and extract the temperature data from the div tag with class “temp”.
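The text taken from the tag is a string, so it usually needs cleaning before it can be used as a number. Here is a minimal sketch, assuming the site shows values such as "23°C" (a hypothetical format, since the weather page here is only an example). The helper parse_temperature is made up for this illustration and is not part of Beautiful Soup.

```python
import re


def parse_temperature(text):
    """Return the first number found in text as a float, or None if absent."""
    match = re.search(r"-?\d+(?:\.\d+)?", text)
    return float(match.group()) if match else None


print(parse_temperature(" 23°C "))   # 23.0
print(parse_temperature("-4.5 °F"))  # -4.5
print(parse_temperature("N/A"))      # None
```

Returning None for text without a number lets the calling code decide how to handle a missing reading instead of failing with an exception.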

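Similarly, we can extract data from tables, lists, and other HTML elements using Beautiful Soup. Let’s say we want to extract the top 10 movies from IMDb. Assuming the chart is rendered as a table with the class names used below (inspect the live page first, since websites change their markup and these selectors may no longer match), we can use the following Python code to extract the movie titles and ratings from the webpage: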

    import requests
    from bs4 import BeautifulSoup

    url = 'https://www.imdb.com/chart/top'
    response = requests.get(url)
    soup = BeautifulSoup(response.text, 'html.parser')

    # Locate the chart table, then collect its rows
    movie_table = soup.find('table', {'class': 'chart full-width'})
    movie_rows = movie_table.find_all('tr')

    # Skip the header row and keep the first 10 movies
    for row in movie_rows[1:11]:
        title_column = row.find('td', {'class': 'titleColumn'})
        title = title_column.a.text
        rating_column = row.find('td', {'class': 'ratingColumn'})
        rating = rating_column.strong.text
        print(title, '-', rating)

Provided the page still uses these class names, the above code will extract the top 10 movie titles and ratings from the IMDb website and print them on the console.

In addition to Beautiful Soup, we can also use other Python libraries such as Scrapy, PyQuery, and lxml for web scraping purposes.

It’s important to note that some

Final Thought: Best Practices for Web Scraping with Python

Web scraping can be a powerful tool for extracting valuable data from websites, but it’s important to follow ethical and legal guidelines while scraping data. Here are some best practices to keep in mind while web scraping with Python:

1. Respect website terms and conditions:

Always make sure to review the website’s terms and conditions and obtain permission before scraping any data. Some websites explicitly prohibit web scraping, and scraping data from such websites can lead to legal issues.

2. Use appropriate headers:

When sending requests to websites, make sure to use appropriate headers. This includes setting the User-Agent header to identify your scraper, along with any other headers the website requires.

3. Avoid overloading websites with requests:

Sending too many requests to a website can lead to server overload and legal issues. Make sure to limit the number of requests you send and use appropriate pauses between requests.

4. Use appropriate scraping techniques:

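Some websites may use techniques such as CAPTCHA to prevent web scraping. Treat these as a sign that automated access may be unwelcome: check whether the site offers an official API or ask for permission first, and use techniques such as rotating IP addresses or CAPTCHA-solving services only where you are permitted to do so.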

5. Handle errors gracefully:

Web scraping can be an error-prone process, and it’s important to handle errors gracefully. Make sure to handle exceptions and errors in your code and log them appropriately.

6. Use appropriate data storage techniques:

Once you have extracted data from a website, make sure to store it appropriately. This includes using appropriate file formats and storage locations and encrypting sensitive data if required.

7. Use appropriate libraries:

Python has a vast array of libraries that can be used for web scraping, but it’s important to use appropriate libraries for your use case. Make sure to use libraries that are actively maintained and have good documentation.
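Several of these practices can be sketched in a few lines. The example below is one possible approach, not a definitive implementation: it checks a URL against robots.txt rules with the standard library’s urllib.robotparser, and it retries a failed request with a pause while logging each error. The names USER_AGENT, is_allowed, fetch_with_retries, and flaky_fetch are hypothetical, and flaky_fetch stands in for a real HTTP call so that the example runs without network access.

```python
import logging
import time
from urllib.robotparser import RobotFileParser

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("scraper")

# Hypothetical identity; use a name and contact that describe your own scraper.
USER_AGENT = "example-scraper/0.1 (contact: you@example.com)"


def is_allowed(robots_lines, url, user_agent=USER_AGENT):
    """Check a URL against robots.txt rules supplied as a list of lines."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch(user_agent, url)


def fetch_with_retries(fetch, url, retries=3, delay=1.0):
    """Call fetch(url), pausing and retrying when it raises an exception.

    fetch is any callable that returns the page body or raises on failure.
    Returns None if every attempt fails.
    """
    for attempt in range(1, retries + 1):
        try:
            return fetch(url)
        except Exception as exc:  # real code should catch narrower exceptions
            logger.warning("Attempt %d for %s failed: %s", attempt, url, exc)
            if attempt < retries:
                time.sleep(delay)  # pause so we do not hammer the server
    return None


# Example robots.txt rules (made up for this demonstration).
robots = ["User-agent: *", "Disallow: /private/"]
print(is_allowed(robots, "https://example.com/weather"))
print(is_allowed(robots, "https://example.com/private/data"))

calls = {"count": 0}


def flaky_fetch(url):
    """Stand-in for a real HTTP call: fails once, then succeeds."""
    calls["count"] += 1
    if calls["count"] == 1:
        raise ConnectionError("temporary failure")
    return "<html>ok</html>"


page = fetch_with_retries(flaky_fetch, "https://example.com/weather", delay=0.01)
print(page)
```

In a real scraper, the fetch argument could be a small function that wraps requests.get(url, headers={"User-Agent": USER_AGENT}, timeout=10) and calls raise_for_status() on the response, so that HTTP errors also trigger the retry logic.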

By following these best practices, you can ensure that your web scraping activities are ethical, legal, and effective. Happy scraping!